AI Smart Glasses vs. AR Smart Glasses: What’s the Real Difference?

Smart glasses are no longer science fiction. Two distinct but overlapping families are reaching stores: AI smart glasses (built around on-device and cloud AI assistants, sensors, and voice/camera workflows) and AR smart glasses (built around visible digital overlays, heads-up displays, and spatial interaction). They often share hardware (cameras, microphones, open-ear or bone-conduction audio, compact processors) but solve different problems and make different product tradeoffs. This article explains each category, compares them using real product examples, covers Meta’s upcoming Celeste AR glasses, and outlines the short- to mid-term trends you should expect.

What are AI Smart Glasses?

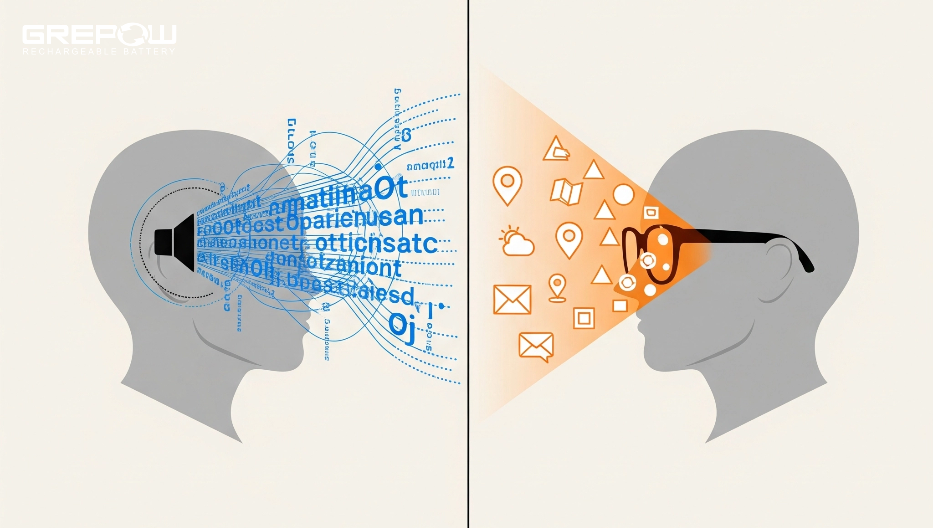

AI smart glasses are fundamentally designed to integrate artificial intelligence directly into our visual and auditory experience. Their primary function isn't necessarily to overlay digital information onto the real world in a visually immersive way (though some may have limited display capabilities), but rather to process and interpret the world around us using AI, then provide intelligent insights and assistance. Core jobs include voice queries, messaging, navigation, real‑time translation, object recognition, and frictionless photo/video capture, with design optimized for comfort, battery efficiency, and social acceptance. Think of them as a personal AI assistant embedded in your eyewear. They often feature:

●Advanced Microphones and Speakers: For natural language processing, voice commands, and audio feedback.

●Cameras: To capture visual information, which AI then analyzes for object recognition, scene understanding, and even facial recognition (with ethical considerations).

●Onboard Processors: To run AI models locally for real-time analysis, or to connect to cloud-based AI services.

●Connectivity: Bluetooth, Wi-Fi, and sometimes cellular for seamless integration with smartphones and the internet.

●Form factor: Looks like normal glasses; sub‑50 g designs are increasingly common for all‑day wear.

What are AR Smart Glasses?

AR smart glasses, on the other hand, are dedicated to Augmented Reality (AR). Their core purpose is to overlay digital information directly onto your view of the real world, creating an interactive, mixed-reality experience. This is achieved through transparent displays that project virtual objects, text, and images into your field of vision. Imagine seeing navigation arrows appear on the road ahead, virtual furniture placed in your living room, or interactive instructions floating over a piece of machinery – all while still clearly seeing your physical surroundings. AR glasses require a display element (waveguide, micro-OLED, or HUD), spatial tracking, and a UX that blends real and virtual imagery while keeping the user’s sightline usable and safe. They’re optimized for spatial interaction, navigation, hands-free guidance, and workflows that need persistent visual cues.

Key features of AR smart glasses include:

●Transparent Optical Displays: Projecting digital content onto the lenses, making it appear as if it's part of the real world.

●Environmental Sensors: Cameras, depth sensors, and accelerometers to map the environment, track head movements, and understand spatial relationships.

●Advanced Graphics Processing: To render complex 3D models and animations smoothly.

●Gesture Recognition: Allowing users to interact with virtual objects through hand movements.

●Spatial Audio: Creating the illusion that virtual sounds are coming from specific locations in the real world.

AI Smart Glasses vs. AR Smart Glasses: What’s the Difference?

This is the crucial distinction. While there is overlap (many AR glasses have powerful AI, and AI glasses can have simple AR features), their primary focus separates them.

| Dimension | AI smart glasses | AR smart glasses |
| --- | --- | --- |
| Primary value | Intelligent tasks, context understanding, assistant actions (answering, summarizing, capturing). | Visual augmentation: overlays, HUD, navigation, spatial UI. |
| Display | Often none or very minimal (camera and audio first). Better battery life. | Requires display tech (waveguides/microdisplays). Heavier; draws more power. |
| Interaction style | Voice, touch, camera triggers; AI pipelines (on-device + cloud). | Visual gestures, wrist controllers, eye/head tracking, hand tracking. |
| Typical use cases | Hands-free calls, live captions, visual search, photography assistant, accessibility. | Wayfinding, step-by-step maintenance, surgical overlays, spatial annotations. |
| Privacy risks | Camera + always-listening mics → concerns about recording, data handling. | Same as AI glasses, amplified by visible overlays that may record/label what others see. |
| Form factor | Typically sleek, lightweight, and designed to look normal. | Often bulkier to accommodate display tech and batteries. |
| Price point | More affordable (often $300 - $700). | Expensive (often $500 - $3,500+ for consumer-ready tech). |

Xiaomi AI Glasses vs. Ray-Ban Meta AI Glasses: A Closer Look

These two products offer excellent examples of the AI-focused smart glasses category, showcasing different design philosophies and features.

| Feature | Xiaomi AI Glasses | Ray-Ban Meta AI Glasses | Practical impact |
| --- | --- | --- | --- |
| Chipset | Snapdragon AR1 + BES2700 co-processor | Snapdragon AR1 Gen 1 | Dual-chip approach helps efficiency and standby |
| Camera & capture | 12 MP, 2K/30 fps, up to ~45 min continuous video | 12 MP, ~1080p video, clips limited to ~60 s | Xiaomi favors longer recording; Meta favors short social clips |
| Battery | ~263 mAh; ~8.6 hrs mixed use; ~45 min to full charge | ~4 hrs typical; case adds multiple recharges | Xiaomi prioritizes on-glasses endurance; Meta uses case strategy |
| Lenses & weight | ~40 g; optional electrochromic lenses | ~49-51 g; Ray-Ban frames and finishes | Xiaomi adds instant tint; Meta leans on iconic fashion |
| AI & ecosystem | Hyper XiaoAI; QR payment (Alipay), live translation | Meta AI voice, social integrations (IG/FB), live translation | Region/ecosystem fit: China vs. global Meta services |
| Price (launch) | From ~¥1,999 (~$280) | From ~$299 | Similar price bands with regional availability differences |
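To make the battery row more concrete, the capacity and runtime figures can be turned into an approximate average current draw. The short Python sketch below uses the ~263 mAh and ~8.6 hr figures from the table; the 3.85 V nominal cell voltage and the function names are assumptions made for illustration, not published specifications.

```python
def average_draw_ma(capacity_mah: float, runtime_hours: float) -> float:
    """Average current (mA) that drains `capacity_mah` in `runtime_hours`."""
    if runtime_hours <= 0:
        raise ValueError("runtime_hours must be positive")
    return capacity_mah / runtime_hours


def average_power_mw(capacity_mah: float, runtime_hours: float,
                     nominal_voltage: float = 3.85) -> float:
    """Average power (mW); nominal_voltage is an assumed value, not a spec."""
    return average_draw_ma(capacity_mah, runtime_hours) * nominal_voltage


# Figures quoted in the table: ~263 mAh and ~8.6 hours of mixed use.
draw = average_draw_ma(263, 8.6)
power = average_power_mw(263, 8.6)
print(f"average draw:  {draw:.1f} mA")   # about 30.6 mA
print(f"average power: {power:.0f} mW")  # about 118 mW at the assumed 3.85 V
```

This is only a rough estimate: it ignores conversion losses and the gap between rated and usable capacity, but it shows how tight the power budget of a ~40 g frame is.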

What are Meta’s Celeste (codename Hypernova) AR Smart Glasses?

“Celeste” (often reported under internal codenames like Hypernova) is Meta’s next-generation smart glasses project. Unlike the Ray-Ban Meta AI Glasses, which are more of a "smart assistant in glasses" product, Celeste is believed to represent Meta's ambition to create AR glasses that deliver on the promise of seamlessly blending digital content into the physical world with a full, immersive display. Reports suggest a target price around $800, limited early volumes, and an unveiling around Meta Connect 2025, with shipping potentially in October.

Details remain under wraps and are largely based on leaks, patents, and developer insights. With that caveat, the speculated features and goals of Meta Celeste include:

●Advanced Optical Waveguide Displays: For high-resolution, wide field-of-view AR visuals that are truly transparent.

●Sophisticated Tracking and Mapping: To understand the user's environment with extreme precision, allowing virtual objects to interact realistically with real-world surfaces.

●Onboard Processing: Powerful enough to render complex 3D graphics and run AI algorithms for environmental understanding without constant reliance on a tethered device.

●Eye Tracking: For foveated rendering (optimizing display resolution where the user is looking) and intuitive interaction.

●Electromyography (EMG) Input: Non-invasive sensors, worn at the wrist, that detect the faint electrical signals of muscle activity, allowing fine-grained control of virtual objects without visible hand gestures. This is a key area of Meta's research.

●Sleek, Everyday Wearable Design: The ultimate goal is for these to look as close to regular glasses as possible, making AR accessible and socially acceptable.

Trends Shaping AI and AR Smart Glasses

●AI-first mainstreaming: Smart glasses paired with multimodal AI assistants are becoming the “ideal interface” for hands-free, context-aware computing: translation, object recognition, note-taking, and proactive prompts without screens dominating your day.

●Convergence: hybrid devices. The fastest-selling products will likely blend AI assistants + modest AR overlays (HUD snippets) rather than extreme endpoints (AI-only vs full-AR). Manufacturers want the best UX per watt; hybrid UX balances battery life and capability.

●App ecosystems & developer toolchains: Meta’s push to open developer tools and let glasses run “apps” (reported for Connect 2025) will be pivotal — software richness will determine platform stickiness. Expect more third-party utilities for productivity, translation, and vertical use cases (logistics, field service).

●Edge AI + selective cloud offload: To manage latency, privacy and connectivity, devices will do more inference on device for common tasks (hotwording, face blur, quick translations) and use cloud for heavy models (complex reasoning, multimodal generation). Snapdragon XR and similar chipsets will be common.

●Verticalization, not just consumer: Enterprise AR (maintenance, surgery, logistics) will continue to lead in revenue per unit even as consumer devices scale. Enterprise customers tolerate higher price points when ROI (reduced errors, faster training) is clear.

●Price compression and form-factor innovation: Competition from Xiaomi and other value players will push down entry prices while flagship makers (Meta, Apple if/when it enters) push UX and display quality. Expect incremental improvements in waveguide optics, power-efficient microdisplays, and battery chemistry.

●Growth signals: Industry trackers note >30 AI glasses launches in a year, with projections of multi‑million annual units by the end of the decade as displays, batteries, and agents mature.
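The edge-plus-cloud split described in the trends above can be pictured as a simple routing rule. The Python sketch below is purely illustrative: the task names, the `DeviceState` class, and the `route_task` function are hypothetical and only mirror the examples given in the text; shipping devices would also weigh battery level, latency, and privacy settings.

```python
from dataclasses import dataclass

# Hypothetical task categories, taken from the examples in the text.
ON_DEVICE_TASKS = {"hotword", "face_blur", "quick_translation"}
CLOUD_TASKS = {"complex_reasoning", "multimodal_generation"}


@dataclass
class DeviceState:
    online: bool  # whether a phone or network link is currently available


def route_task(task: str, state: DeviceState) -> str:
    """Return where a task should run: 'device', 'cloud', or 'deferred'."""
    if task in ON_DEVICE_TASKS:
        # Common, latency-sensitive tasks stay local regardless of connectivity.
        return "device"
    if task in CLOUD_TASKS:
        # Heavy models need the cloud; queue the request if there is no link.
        return "cloud" if state.online else "deferred"
    raise ValueError(f"unknown task: {task}")


print(route_task("hotword", DeviceState(online=False)))
print(route_task("complex_reasoning", DeviceState(online=True)))
```

Keeping the lightweight tasks local means the glasses stay responsive and keep raw audio and video on the device for the most frequent interactions, while the cloud handles the occasional heavy request.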

Conclusion

Ultimately, the distinction between "AI smart glasses" and "AR smart glasses" is likely to blur. The ideal future smart glass will be a holistic device that seamlessly integrates both advanced AI capabilities and immersive AR displays. It will understand your world, anticipate your needs, provide intelligent assistance, and overlay helpful digital information, all while looking like a stylish pair of everyday glasses. This convergence represents the true potential of these technologies to reshape how we interact with information and the world around us.

As a world-leading LiPo battery manufacturer, Grepow specializes in producing custom-shaped LiPo batteries featuring advanced silicon-carbon anode technology. This innovation significantly increases energy density while reducing overall weight, a critical advantage for weight-sensitive applications such as wearable and medical devices. For products like AI and AR smart glasses, where both battery weight and runtime are key performance factors, Grepow offers fully customizable solutions tailored to the device’s form factor. By maximizing available space and optimizing design, Grepow helps manufacturers extend battery life, reduce device weight, and enhance overall product performance. If you have any questions or needs, please feel free to contact us at info@grepow.com.
